Publications

Fosco, C., Lahner, B., Pan, B., Andonian, A., Josephs, E., Lascelles, A., & Oliva, A. (2024). Brain Netflix: Scaling Data to Reconstruct Videos from Brain Signals. European Conference on Computer Vision (pp. 457-474). Cham: Springer Nature Switzerland. Paper

Lahner, B., Dwivedi, K., Iamshchinina, P., Graumann, M., Lascelles, A., Roig, G., Gifford, A.T., Pan, B., Jin, S., Murty, N.A.R., Kay, K., Oliva, A.†, and Cichy, R.† (2024). Modeling short visual events through the BOLD moments video fMRI dataset and metadata. Nature Communications, 15(1), 6241. Paper

Gifford, A. T., Lahner, B., Saba-Sadiya, S., Vilas, M. G., Lascelles, A., Oliva, A., Kay, K., Roig, G., and Cichy, R. M. (2023). The Algonauts Project 2023 Challenge: How the Human Brain Makes Sense of Natural Scenes. arXiv, 2301.03198. arXiv Paper ★ Website

Monfort, M., Ramakrishnan, K., Andonian, A., McNamara, B., Lascelles, A., Pan, B., Fan, Q., Gutfreund, D., Feris, R., and Oliva, A. (2021). Multi-Moments in Time: Learning and Interpreting Models for Multi-Action Video Understanding. IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 44(12), 9434-9445. Paper ★ Website

Cichy, R.M., Dwivedi, K., Lahner, B., Lascelles, A., Iamshchinina, P., Graumann, M., Andonian, A., Murty, N.A.R., Kay, K., Roig, G., and Oliva, A. (2021). The Algonauts Project 2021 Challenge: How the Human Brain Makes Sense of a World in Motion. arXiv, 2104.13714. arXiv Paper ★ Website ★ GitHub Code

Ramakrishnan*, K., Monfort*, M., McNamara, B., Lascelles, A., Gutfreund, D., Feris, R., and Oliva, A. (2019). Identifying Interpretable Action Units in Deep Networks. IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2019) Workshop on Explainable AI. Paper

Cichy, R. M., Roig, G., Andonian, A., Dwivedi, K., Lahner, B., Lascelles, A., Mohsenzadeh, Y., Ramakrishnan, K., and Oliva, A. (2019). The Algonauts Project: A Platform For Communication Between the Sciences of Biological and Artificial Intelligence. arXiv, 1905.05675. Paper ★ Website

Lascelles, A. and Bhattacharya, J. (2018). Neural Correlates of Crossmodal Correspondence Between Pitch and Visual Motion. MSc Thesis. Paper ★ Poster for Behavioral Data

Lascelles, A., Drake, J., and Garraffo, C. (2017). Realistic MHD Modeling of Wind-Driven Processes in Cataclysmic Variable-Like Binaries. MPhys Thesis. Paper ★ Video

Lascelles, A. (2012). Submarine Drag Reduction Study. BAE Systems Maritime Summer Internship. Paper

Projects

The Algonauts Project 2023

How the Human Brain Makes Sense of Natural Scenes

The 2023 edition of The Algonauts Challenge focused on explaining responses in the human brain

as participants perceive complex natural visual scenes. Through collaboration with the

Natural Scenes Dataset (NSD) team, this Challenge ran on the largest suitable

brain dataset available (brain responses from 8 human participants to a total of 73,000 different visual scenes), opening new avenues for data-hungry

modeling. The challenge was organized in partnership with the

Conference on Cognitive Computational Neuroscience (CCN).

At every blink our eyes are flooded by a massive array of photons — and yet, we perceive the visual world as ordered and meaningful.

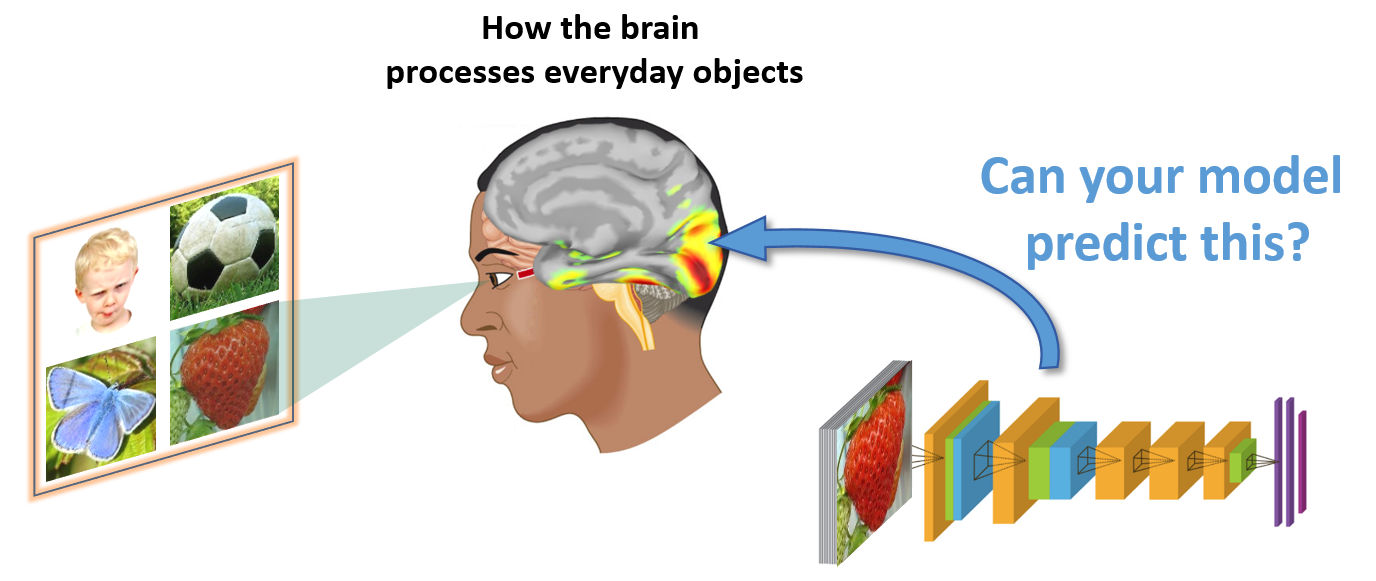

The primary target of the 2023 Challenge was predicting human brain responses to complex natural visual scenes. We posed the question: Given a set

of images, how well does your computational model account for the brain activations when a human viewed those images?
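
As a rough illustration of what "accounting for brain activations" can mean in practice, the sketch below scores a model by correlating its predicted responses with measured responses, separately for each brain location. The use of Pearson correlation, the function names, and the toy numbers are all assumptions made for illustration; this is not the challenge's official evaluation code.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def score_predictions(predicted, measured):
    """Correlate predictions with measurements for each brain location.

    Both arguments are lists of rows: one row per image,
    one column per brain location (hypothetical layout).
    """
    n_locations = len(measured[0])
    scores = []
    for j in range(n_locations):
        pred_col = [row[j] for row in predicted]
        meas_col = [row[j] for row in measured]
        scores.append(pearson(pred_col, meas_col))
    return scores

# Toy data (made up): 4 images, 2 brain locations.
measured = [[1.0, 0.2], [2.0, 0.1], [3.0, 0.4], [4.0, 0.3]]
predicted = [[1.1, 0.4], [1.9, 0.3], [3.2, 0.2], [3.9, 0.1]]
scores = score_predictions(predicted, measured)
```

In this toy case the model tracks the first location closely and gets the second one wrong, so the two scores differ sharply; averaging such scores over all locations gives a single number per model.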

Learn more about Algonauts 2023 here.

Goal Grounding Project

Group collaboration between MIT and Facebook Reality Labs studying the goals of videos

The aim of this project was to collect annotations on egocentric (first-person) videos in which a specific goal is being carried out.

This results in visual ground truth data for when a certain goal is taking place within a video. This would be useful for a number of different

applications, for example, an AI assistant predicting and helping with the next action/goal a human will encounter.

To do this, our team at MIT collaborated with a team at Meta to build an interface to collect data from participants online, selected suitable data sets

to sample from, collected the data using the Prolific platform, and analyzed the data. The interface was a useful online tool that allowed a user to quickly

select portions of video while watching, and was flexible enough to be applied to other research aims.

If you'd like to know more about this project please get in touch.

The Algonauts Project 2021

How the Human Brain Makes Sense of a World in Motion

The 2nd installment of The Algonauts Challenge was centered around video perception and event understanding,

and was organized in partnership with the Conference on Cognitive Computational Neuroscience (CCN).

The challenge focused on explaining responses in the human brain as subjects watched short video clips of everyday events.

For this release, in addition to building the website, I was also involved in the collection of the data set — creating the video data set (1102 3-second videos),

learning fMRI techniques, scanning 10+ participants, and making all the data available to the public.

Learn more about Algonauts 2021 here.

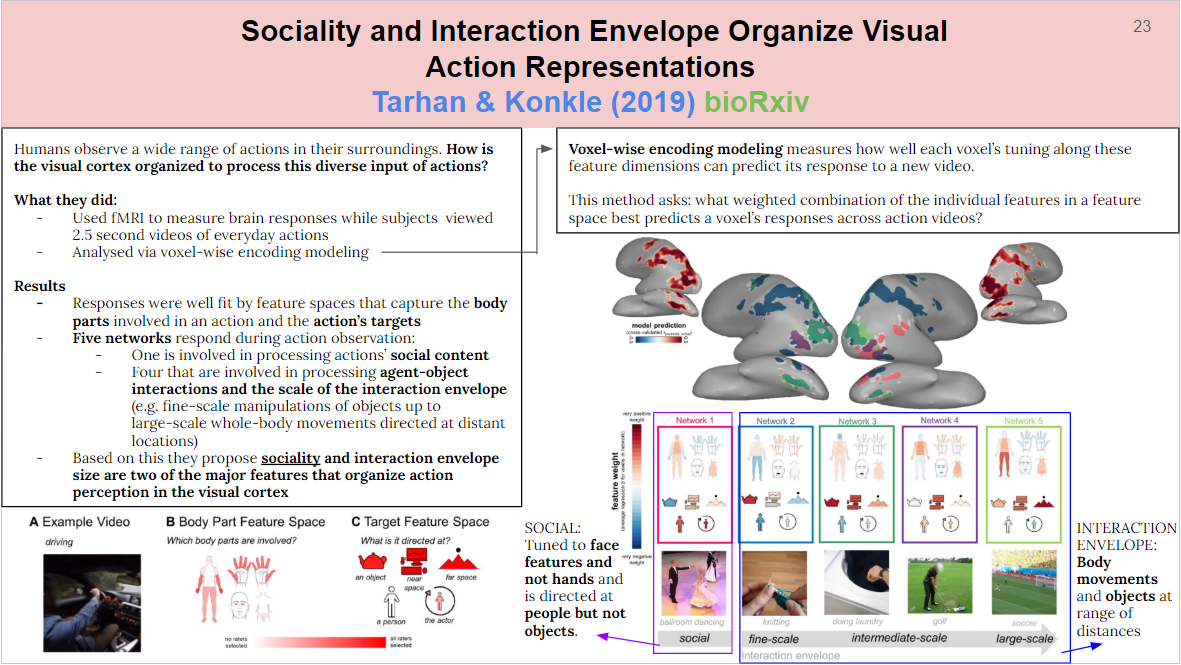

Computational Neuroscience Paper Summaries

I created one-slide summaries of 100+ computational visual neuroscience academic papers and other resources. They were made so that you could quickly understand the gist of each paper or resource and easily come back to it later to remember what it was about. Each summary included a short section on the background, what the researchers did, what they found, and any other important information.

The Algonauts Project 2019

Explaining the Human Visual Brain: Workshop and Challenge

While at MIT, I helped to build The Algonauts Project —

an initiative bringing biological and artificial intelligence researchers together on a

common platform to exchange ideas and advance both fields. Inspired by the astronauts' exploration of space, "algonauts" explore human and artificial intelligence

with state-of-the-art algorithmic tools. Our first challenge and workshop,

"Explaining the Human Visual Brain", focused on building computer vision models that simulate how the brain sees and recognizes objects, a topic that

has long fascinated neuroscientists and computer scientists.

Across two competition tracks, fMRI and MEG, we gave participants a set of images consisting of everyday objects and the corresponding brain activity recorded

while human subjects viewed those images. Participants competed to devise computational models that best predicted the brain activity of a brand new set of images.

The winners were announced at a workshop that we organized at MIT, featuring guest speakers who are some of the leading experts in the field of computational

neuroscience of vision, from institutions such as Harvard, UC Berkeley, Columbia, FU Berlin, and DeepMind.

Learn more about The Algonauts Project and the 2019 edition here,

on the webpages that I built to explain and distribute the challenges and workshops.

The data set consisted of two image sets, of 92 and 118 images, for which we also provided the fMRI and MEG brain data associated with humans viewing these images.

MSc Thesis (2018):

Neural Correlates of Crossmodal Correspondence Between Pitch and Visual Motion

Supervisor: Prof Joydeep Bhattacharya FRSA

Our senses are not independent entities — instead, they work together to build our realities. When one sense influences another, we call this

phenomenon crossmodal correspondence. I studied a particular crossmodal correspondence in which ascending/descending pitches as well as the

spoken words "up"/"down" (auditory domain) were able to bias our perception of visual motion (visual domain).

I learned MATLAB so I could design and conduct an EEG experiment in which the participant judged the direction of motion of an ambiguous (same

upwards and downwards components) moving grating whilst listening to the auditory stimuli. Using behavioural and ERP analysis, I showed that

auditory stimuli were enough to bias people's perception of visual motion: an ascending tone caused more people to perceive upwards motion,

when in fact there was no overall movement in either direction. This was the first time that this effect was studied using brain imaging techniques.

Read my MSc thesis here or

view my poster for the behavioural data here.

Speed Reader

Anvil Hack 2018

While at Goldsmiths, I participated in a number of different hackathons including Anvil Hack. During this event, I worked in a team of 3 to make a platform that converts text into a form that can be read much more quickly. We were inspired by this advert by Honda. Perhaps in the future, devices that use this technology might be commonplace.

The magnetic field lines are represented in grey and wind speed by the red/black color map. The blue color map shows mass density (effectively tracing accretion).

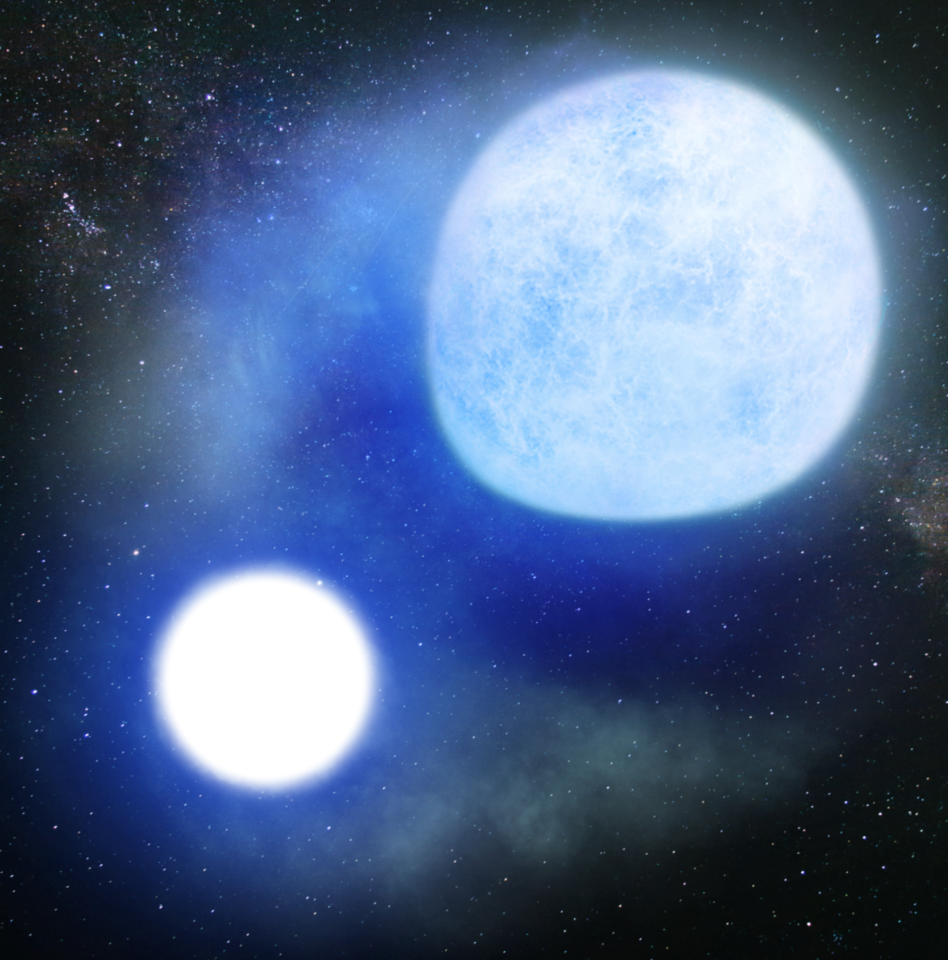

MPhys Thesis (2017):

Realistic MHD Modeling of Wind-Driven Processes in Cataclysmic Variable-Like Binaries

Supervisors: Dr Cecilia Garraffo and Dr Jeremy Drake

When main sequence stars such as our Sun orbit a star known as a white dwarf, they can form something called a cataclysmic variable-like binary. The

charged ions contained within the magnetic fields of these stars create a complex magnetic wind structure as the two bodies orbit each other.

When this wind structure decouples from the system it carries away mass and angular momentum, which impacts how the stars spin and how their orbits evolve.

Using 3-D magnetohydrodynamic simulations, I explored the effects of orbital separation and magnetic field configuration on these mass and angular momentum

loss rates for binary systems. The current models didn't include the magnetic fields of the stars. It was hypothesized that including the magnetic field of

the white dwarf in the binary system could alter these rates. I found that when including these magnetic fields, the mass and angular momentum loss rates

dropped by factors of 4 and 6 respectively, suggesting that the current models for these systems should be amended to include the magnetic field.

Read my MPhys thesis here

or watch a 20-min video of my thesis defense here, given at the Harvard-Smithsonian

Center for Astrophysics High Energy Seminar.

Hack @ Brown 2017

Saguaro: An open source, extensible home automation platform

I attended a hackathon at Brown University where I worked within a team of 5 to design and build an IoT platform called Saguaro. Saguaro allows

hardware devices to be controlled by a simple web interface.

Activating hardware in your home — lights, windows, anything you can run a wire to — is as simple as pushing a button. Additionally,

Saguaro learns your schedule, and over time is able to anticipate your actions and automate your home for you.

Bottom image credit: Lynette Cook / extrasolar.spaceart.org

What Do Planetary Orbits around Binary Star Systems Look Like?

Computing Project #1 (2016)

Incredibly, almost half of the star systems that we see in the sky contain multiple stars! This project aimed to simulate planetary orbits around

a binary (two) star system. This is important to study because such simulations could be used to detect habitable planets outside our solar system (exoplanets).

For life to exist, the planet on which it occurs must keep a stable orbit over long time-scales. This is similar to the novel "The Three-Body Problem"

by Liu Cixin, now made into a Netflix series.

To find stable orbits, I used a Runge-Kutta approach to solve the differential equations involved in such a 3-body system and tested various initial

velocities and separations. I found many p- and s-type orbits (the two species of stable planetary orbit), as well as some chaotic orbits,

and determined whether they possessed a habitable zone where the planet could sustain life.
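
To give a flavour of the approach, here is a minimal sketch of a classical fourth-order Runge-Kutta (RK4) integrator for three gravitating bodies in a plane. It is not the code from the report: the choice of RK4 specifically, the code units (G = 1), the masses, and the starting positions and velocities are all illustrative assumptions, set up so that a near-massless planet circles both stars (a P-type orbit).

```python
import math

G = 1.0  # gravitational constant in illustrative code units (assumption)

def accelerations(positions, masses):
    """Newtonian gravitational acceleration on each body (2-D)."""
    acc = []
    for i, (xi, yi) in enumerate(positions):
        ax = ay = 0.0
        for j, (xj, yj) in enumerate(positions):
            if i == j:
                continue
            dx, dy = xj - xi, yj - yi
            r3 = (dx * dx + dy * dy) ** 1.5
            ax += G * masses[j] * dx / r3
            ay += G * masses[j] * dy / r3
        acc.append((ax, ay))
    return acc

def derivative(state, masses):
    """state = (positions, velocities); returns (velocities, accelerations)."""
    positions, velocities = state
    return velocities, accelerations(positions, masses)

def shifted(state, deriv, h):
    """Return state + h * deriv, component by component."""
    return tuple(
        [(x + h * dx, y + h * dy) for (x, y), (dx, dy) in zip(part, dpart)]
        for part, dpart in zip(state, deriv)
    )

def rk4_step(state, masses, h):
    """Advance the whole system by one time step h using classical RK4."""
    k1 = derivative(state, masses)
    k2 = derivative(shifted(state, k1, h / 2), masses)
    k3 = derivative(shifted(state, k2, h / 2), masses)
    k4 = derivative(shifted(state, k3, h), masses)
    combined = tuple(
        [
            ((a[0] + 2 * b[0] + 2 * c[0] + d[0]) / 6,
             (a[1] + 2 * b[1] + 2 * c[1] + d[1]) / 6)
            for a, b, c, d in zip(p1, p2, p3, p4)
        ]
        for p1, p2, p3, p4 in zip(k1, k2, k3, k4)
    )
    return shifted(state, combined, h)

def total_energy(state, masses):
    """Kinetic plus potential energy, used here as a sanity check."""
    positions, velocities = state
    kinetic = sum(0.5 * m * (vx * vx + vy * vy)
                  for m, (vx, vy) in zip(masses, velocities))
    potential = 0.0
    for i in range(len(masses)):
        for j in range(i + 1, len(masses)):
            dx = positions[i][0] - positions[j][0]
            dy = positions[i][1] - positions[j][1]
            potential -= G * masses[i] * masses[j] / math.hypot(dx, dy)
    return kinetic + potential

# Two equal stars on a circular orbit (separation 1) plus a tiny planet at radius 4.
masses = [0.5, 0.5, 1e-6]
state = (
    [(0.5, 0.0), (-0.5, 0.0), (4.0, 0.0)],   # positions
    [(0.0, 0.5), (0.0, -0.5), (0.0, 0.5)],   # velocities
)
initial_energy = total_energy(state, masses)
radii = []  # planet's distance from the origin at each step
for _ in range(2000):
    state = rk4_step(state, masses, 0.01)
    px, py = state[0][2]
    radii.append(math.hypot(px, py))
```

Tracking the planet's distance over time is one simple way to tell a stable orbit from a chaotic one, and checking that the total energy stays nearly constant is a quick test that the step size is small enough.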

Read my report on this project here.

The Structure of White Dwarf Stars

Computing Project #2 (2016)

White dwarf (WD) stars are extremely dense objects — the only thing preventing them from collapsing into a black hole is a force called

electron degeneracy pressure, which

arises because no two electrons can occupy the same quantum state (the Pauli exclusion principle). WDs are very important within astronomy

— they appear in many areas of study, including galaxy formation, stellar evolution, and supernovae. Since over 95% of the stars we

observe will end their lives as WDs, knowing how they function and how their parameters behave is crucial.

In this project, I created a model for determining the mass-radius variation in WDs, and ultimately found the critical mass of a WD

(Chandrasekhar limit), beyond which the

electron degeneracy pressure can no longer support the star and it must collapse into a neutron star or a black hole.
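
A model of this kind typically starts from the equations of stellar structure combined with a pressure law for degenerate electrons. The forms below are the standard textbook ones and are given as an assumption about the setup, not as a record of the exact equations used in the project. Here m(r) is the mass enclosed within radius r, ρ is the density, P is the pressure, G is the gravitational constant, and M and R are the star's total mass and radius.

```latex
\begin{align}
\frac{dm}{dr} &= 4\pi r^{2}\rho(r), \\
\frac{dP}{dr} &= -\frac{G\,m(r)\,\rho(r)}{r^{2}}, \\
P &\propto \rho^{5/3} \;\Rightarrow\; R \propto M^{-1/3} \quad \text{(non-relativistic electrons)}, \\
P &\propto \rho^{4/3} \;\Rightarrow\; M \to M_{\mathrm{Ch}} \quad \text{(ultra-relativistic electrons)}.
\end{align}
```

The first scaling says that more massive WDs are smaller. The second says that once the electrons become ultra-relativistic the mass no longer depends on the radius, which is where the limiting mass of roughly 1.4 solar masses comes from.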

Top image credit: Kevin Burdge / Article

How Do You Find the Period of an Eclipsing Binary Star System?

Astrophysics Project at the Teide Observatory, Tenerife (2015)

As part of my master's degree, we spent a week in Tenerife collecting data with professional telescopes to conduct an experiment

on an area of astrophysics of our choosing. I chose to research eclipsing binaries: here, two-star systems each consisting of a white

dwarf orbiting a main sequence star (similar to our Sun). The white dwarf is much more massive and therefore accretes (steals) matter

from the other star.

When we view them using telescopes, they appear to be just a single point of light because we are so far away.

But since the two stars are orbiting each other, if viewed from the right angle (plane of rotation), one star will periodically pass in front of the other, resulting in a dimming of the total

brightness. This dimming of light can be measured, and from that we can work out the period (time for the stars to completely orbit

one another). In this project, I measured the dimming of the binary over time to work out the period (~2 hrs). If you are interested

in learning more about eclipsing binaries, this is a great resource!
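
One simple way to turn brightness measurements into a period is to "fold" the light curve at many trial periods and keep the one where the folded points line up best. The sketch below does this with a basic phase-dispersion measure. It is an illustration only, not the analysis from the report: the method, the function names, and the light curve (synthetic, with a made-up 2-hour period, eclipse depth, and noise level) are all assumptions.

```python
import random

def phase_dispersion(times, fluxes, period, n_bins=20):
    """Sum of squared deviations from each bin mean after folding at `period`."""
    bins = [[] for _ in range(n_bins)]
    for t, f in zip(times, fluxes):
        phase = (t % period) / period          # position within the cycle, 0..1
        bins[min(int(phase * n_bins), n_bins - 1)].append(f)
    total = 0.0
    for values in bins:
        if values:
            mean = sum(values) / len(values)
            total += sum((v - mean) ** 2 for v in values)
    return total

def find_period(times, fluxes, trial_periods):
    """Return the trial period whose folded light curve is least scattered."""
    return min(trial_periods, key=lambda p: phase_dispersion(times, fluxes, p))

# Synthetic light curve: 500 irregularly timed measurements over 10 hours.
rng = random.Random(0)
true_period = 2.0  # hours (made up for this example)
times = sorted(rng.uniform(0.0, 10.0) for _ in range(500))
fluxes = []
for t in times:
    phase = (t % true_period) / true_period
    in_eclipse = phase < 0.1                   # eclipse lasts 10% of the cycle
    fluxes.append((0.6 if in_eclipse else 1.0) + rng.gauss(0.0, 0.01))

# Try periods from 1.5 to 3.0 hours in steps of 0.005 hours.
trial_periods = [1.5 + 0.005 * i for i in range(301)]
best_period = find_period(times, fluxes, trial_periods)
```

At the correct period every eclipse lands at the same phase, so each bin contains only similar brightness values and the dispersion is small. At a wrong period the dips smear across bins and the dispersion rises.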

Read my report on this project here.

How Can Submarines Reduce Drag?

BAE Systems Summer Internship (2012)

Before I started university, I spent a summer internship at BAE Systems Maritime completing a project focusing on how to reduce physical drag on the

new class of nuclear submarines.

I investigated how changes to the bow, control surfaces, fins, and body of the submarine would affect the hydrodynamics of the submarine. I also

investigated if it would be possible to inject polymers or micro-bubbles to change the way the water flowed over the submarine's surface. Finally,

I looked into more radical solutions such as supercavitation, a technology used in some underwater missiles that involves vaporizing the water

around the supercavitating body so that it travels inside a bubble of gas, reducing friction drag.

Read the report here.

Copyright © Alex Lascelles. Last Updated Jul 2025.